Robot, can you say ‘Cheese’?

Columbia engineers build Emo, a silicone-clad robotic face that makes eye contact and uses two AI models to anticipate a person’s smile before it appears and replicate it at the same moment the person smiles. It is a major advance in robots accurately predicting human facial expressions, improving interactions, and building trust between humans and robots.

Media Contact

Holly Evarts, Director of Strategic Communications and Media Relations 347-453-7408 (c) | 212-854-3206 (o) | holly.evarts@columbia.edu

About the Study

JOURNAL: Science Robotics

TITLE: “Human-Robot Facial Co-expression”

AUTHORS: Yuhang Hu (1); Boyuan Chen (2, 3, 4); Jiong Lin (1); Yunzhe Wang (5); Yingke Wang (5); Cameron Mehlman (1); and Hod Lipson (1, 6)

1. Creative Machines Laboratory, Mechanical Engineering Department, Columbia University
2. Mechanical Engineering and Materials Department, Duke University
3. Electrical and Computer Engineering Department, Duke University
4. Computer Science Department, Duke University
5. Computer Science Department, Columbia University
6. Data Science Institute, Columbia University

FUNDING: The study was supported by the National Science Foundation AI Institute for Dynamical Systems (DynamicsAI.org) grant 2112085, and an Amazon grant through the Columbia Center of AI Technology (CAIT).

The authors declare that they have no competing interests.

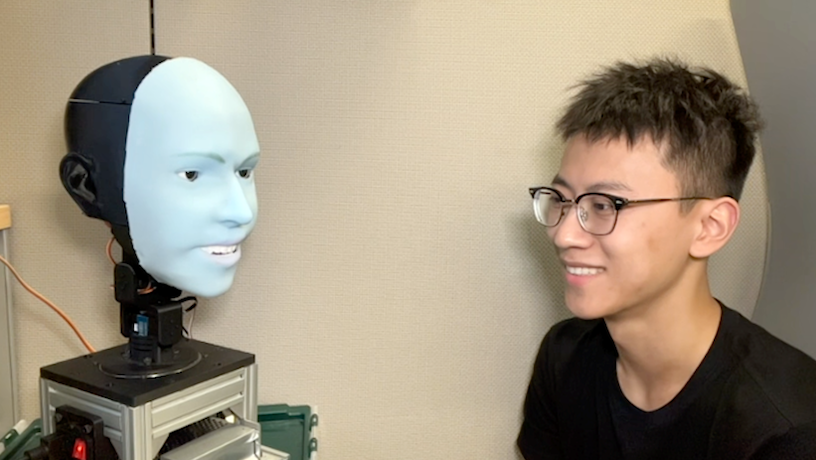

Yuhang Hu, the study’s lead author and a PhD student in Hod Lipson’s lab at Columbia Engineering. Credit: John Abbott/Columbia Engineering

What would you do if you walked up to a robot with a human-like head and it smiled at you first? You’d likely smile back and perhaps feel the two of you were genuinely interacting. But how does a robot know how to do this? Or, better yet, how does it know how to get you to smile back?

While we’re getting accustomed to robots that are adept at verbal communication, thanks in part to advancements in large language models like ChatGPT, their nonverbal communication skills, especially facial expressions, have lagged far behind. Designing a robot that can not only make a wide range of facial expressions but also know when to use them has been a daunting task.

Tackling the challenge

The Creative Machines Lab at Columbia Engineering has been working on this challenge for more than five years. In a new study published today in Science Robotics, the group unveils Emo, a robot that anticipates facial expressions and executes them simultaneously with a human. It has even learned to predict a forthcoming smile about 840 milliseconds before the person smiles, and to co-express the smile simultaneously with the person.

The team, led by Hod Lipson, a leading researcher in the fields of artificial intelligence (AI) and robotics, faced two challenges: how to mechanically design an expressively versatile robotic face, which involves complex hardware and actuation mechanisms, and how to know which expressions to generate so that they appear natural, timely, and genuine. The team proposed training a robot to anticipate future facial expressions in humans and execute them simultaneously with a person. Timing was critical: delayed facial mimicry looks disingenuous, whereas facial co-expression feels more genuine because it requires correctly inferring the human’s emotional state in time to act on it.

Yuhang Hu of the Creative Machines Lab face-to-face with Emo. Image: Courtesy of Creative Machines Lab

How Emo connects with you

Emo is a human-like head whose face is equipped with 26 actuators that enable a broad range of nuanced facial expressions. The head is covered with a soft silicone skin held on by magnets, allowing for easy customization and quick maintenance. For more lifelike interactions, the researchers integrated high-resolution cameras within the pupil of each eye, enabling Emo to make eye contact, which is crucial for nonverbal communication.

The team developed two AI models: one that predicts human facial expressions by analyzing subtle changes in the target face, and another that generates the motor commands needed to produce the corresponding facial expressions on Emo’s own face.

To teach the robot how to make facial expressions, the researchers put Emo in front of a camera and let it make random movements. After a few hours, the robot had learned the relationship between its facial expressions and the motor commands that produce them, much the way humans practice facial expressions by looking in a mirror. The team calls this “self-modeling,” similar to our human ability to imagine what we look like when we make certain expressions.
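The release does not describe the self-model’s internals, so what follows is only a toy illustration of the idea, not the model used in the study. It assumes, hypothetically, that the face responds linearly to its motors (3 motors moving 3 landmark coordinates here, versus Emo’s 26 actuators and real skin mechanics). The robot “babbles” random motor commands, records the landmarks the camera sees, and fits an inverse model by least squares that maps a desired expression back to the commands that produce it. All function names and numbers are invented for illustration.

```python
import random


def apply_linear(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def babble(face, n_motors, n_samples, seed=0):
    """'Mirror' phase: send random motor commands and record the resulting landmarks.

    `face` is a hypothetical linear stand-in for the real skin-and-actuator response.
    """
    rng = random.Random(seed)
    commands = [[rng.uniform(-1.0, 1.0) for _ in range(n_motors)] for _ in range(n_samples)]
    landmarks = [apply_linear(face, cmd) for cmd in commands]
    return commands, landmarks


def fit_inverse_model(commands, landmarks):
    """Least-squares map from landmark positions to motor commands.

    For each motor, solve the normal equations (Y^T Y) w = Y^T m, where Y stacks
    the observed landmark vectors and m holds that motor's commands.
    """
    k = len(landmarks[0])
    gram = [[sum(y[i] * y[j] for y in landmarks) for j in range(k)] for i in range(k)]
    inverse = []
    for motor in range(len(commands[0])):
        rhs = [sum(y[i] * cmd[motor] for y, cmd in zip(landmarks, commands)) for i in range(k)]
        inverse.append(solve(gram, rhs))
    return inverse  # one row of weights per motor


# Hypothetical face: 3 motors nudging 3 landmark coordinates (e.g. mouth corners, brow).
FACE = [[1.0, 0.5, 0.0],
        [0.2, 1.0, 0.3],
        [0.0, -0.4, 1.0]]

commands, landmarks = babble(FACE, n_motors=3, n_samples=200)
inverse_model = fit_inverse_model(commands, landmarks)

target_expression = [0.3, -0.2, 0.5]                    # desired landmark offsets
motor_command = apply_linear(inverse_model, target_expression)
achieved = apply_linear(FACE, motor_command)            # what the face actually does
print([round(v, 6) for v in achieved])
```

Because this toy face is exactly linear and noiseless, the achieved landmarks match the target up to floating-point rounding; a real face is nonlinear and noisy, which is why a learned model is needed in practice.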

Then the team played videos of human facial expressions for Emo to observe frame by frame. After a few hours of training, Emo could predict people’s facial expressions by observing tiny changes in their faces as they begin to form an intent to smile.
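Emo’s predictor is a learned model whose internals the release does not describe. As a deliberately simple stand-in for the idea of reading intent from tiny frame-to-frame changes, the sketch below fits a straight line to the last few frames of a single hypothetical “mouth-corner lift” measurement and extrapolates it about 840 milliseconds ahead, the lead time reported for Emo. The 30 frames-per-second camera rate, the 5-frame window, the threshold, and all names are assumptions.

```python
def fit_line(values):
    """Ordinary least-squares line through (t, value) for t = 0..n-1; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two frames to estimate a trend")
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    slope = num / den
    return slope, v_mean - slope * t_mean


def predict_ahead(history, lead_time_s=0.84, fps=30.0, window=5):
    """Extrapolate the recent trend `lead_time_s` seconds past the latest frame."""
    recent = history[-window:]
    slope, intercept = fit_line(recent)
    horizon = round(lead_time_s * fps)  # 0.84 s at an assumed 30 fps is 25 frames
    latest_t = len(recent) - 1
    return slope * (latest_t + horizon) + intercept


def smile_expected(history, threshold=0.5, **kwargs):
    """Hypothetical trigger: start smiling if the forecast crosses the threshold."""
    return predict_ahead(history, **kwargs) >= threshold


# Per-frame mouth-corner lift (arbitrary units) as a smile begins to form.
onset = [0.0, 0.0, 0.01, 0.03, 0.05, 0.07, 0.09]
neutral = [0.1] * 10

print(predict_ahead(onset), smile_expected(onset), smile_expected(neutral))
```

A linear trend is about the crudest forecaster possible; it only shows why catching the onset of motion lets a robot begin an expression before the person’s expression is complete.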

“I think predicting human facial expressions accurately is a revolution in HRI [human-robot interaction]. Traditionally, robots have not been designed to consider humans' expressions during interactions. Now, the robot can integrate human facial expressions as feedback,” said the study’s lead author Yuhang Hu, who is a PhD student at Columbia Engineering in Lipson’s lab. “When a robot makes co-expressions with people in real-time, it not only improves the interaction quality but also helps in building trust between humans and robots. In the future, when interacting with a robot, it will observe and interpret your facial expressions, just like a real person.”

Go inside the Creative Machines Lab to watch Emo's facial co-expression. Video Credit: Yuhang Hu, Columbia Engineering

What’s next

The researchers are now working to integrate verbal communication into Emo, using a large language model such as ChatGPT. As robots become more capable of behaving like humans, Lipson is well aware of the ethical considerations associated with this new technology.

“Although this capability heralds a plethora of positive applications, ranging from home assistants to educational aids, it is incumbent upon developers and users to exercise prudence and ethical considerations,” says Lipson, James and Sally Scapa Professor of Innovation in the Department of Mechanical Engineering at Columbia Engineering, co-director of the Makerspace at Columbia, and a member of the Data Science Institute. “But it’s also very exciting -- by advancing robots that can interpret and mimic human expressions accurately, we're moving closer to a future where robots can seamlessly integrate into our daily lives, offering companionship, assistance, and even empathy. Imagine a world where interacting with a robot feels as natural and comfortable as talking to a friend.”